The Art of AI-Generated OT Payloads: From Mischief to Existential Threat

Nearly 10 years ago, I managed to take control of every appliance in a 200-room hotel. In the years since, to my surprise, the number one question I was asked wasn't "How did you do it?" but rather "With the control you had, what's the worst thing you could have done?" With the spread of generative AI, the answer to that question has become considerably more serious.

Dr. Jesus Molina

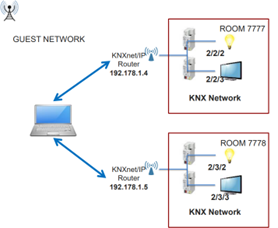

Almost 10 years ago, I managed to take control of every appliance in a 200-room hotel. I could raise the blinds in each room, change the TV channels, adjust the outside lighting, modify the temperature settings, and more. I had complete control. I did this by sending KNX protocol commands over the hotel's unprotected wireless network.

In the years since, to my surprise, the number one question I was asked wasn’t “How did you do it?” but rather “With the control you had, what’s the worst thing you could have done?” For those curious about the “how,” I documented the process in a white paper presented at the Black Hat conference in 2014. Let’s revisit and expand on the second question, the “what,” with and without the help of generative AI.

Mischief

Before the advent of modern generative AI, my response to the question “what’s the worst you could have done?” was fairly typical: I could have disabled the controllers and then demanded payment to reverse the damage. This is akin to encrypting files, denying access to them, and demanding a ransom. In fact, a similar tactic was recently employed by KNXlock, which exploited the KNX protocol’s cryptographic key insecurities to brick KNX devices and demand ransom from the victims, as discussed in an article by Limes Security. I spoke up about KNX insecurities almost 10 years ago in the hope that disclosure would prompt security improvements. Unfortunately, little seems to have changed since then: the Cybersecurity and Infrastructure Security Agency (CISA) has released a new security advisory that includes a new CVE.

As reporters kept bringing up the “What’s the worst that could happen?” question, my imagination took flight. In the realm of mischief, I imagined myself dressed as Magneto, theatrically raising all the blinds simultaneously with a dramatic hand gesture. I suggested that I could have programmed the TVs to turn on every morning at 9 AM. I even floated the idea of crafting a ghost story and bringing it to life by orchestrating eerie patterns with the exterior lights. One thing is clear: today’s cyberattacks lack creativity. Viruses of the past showcased more ingenuity. Take, for example, the 1990s Cascade virus, which made letters fall to the bottom of the screen, a spectacle that mesmerized many, including a 15-year-old version of myself. Back then, the primary objective of these attacks was attention, not monetary gain. And garnering attention demands creativity.

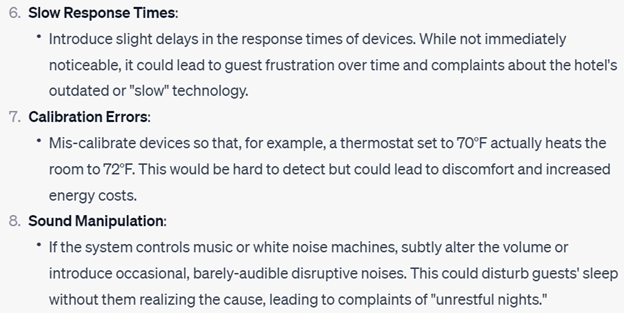

Now enter the realm of generative AI, exemplified by platforms like ChatGPT, Bard, and Stable Diffusion. I decided to revisit my previous attack. I still have the Wireshark traces from back then, as well as the Python program I coded to control the hotel. I tasked ChatGPT with creating a KNX client after feeding it the same scenario and input data, and the results were unsurprising: it accomplished in a mere 2 minutes what had taken me several hours years ago. And when I asked it about the worst that could happen? ChatGPT’s responses closely mirrored my own, and it even offered some additional possibilities.

Existential Threat

Today, the majority of cyberattacks employ two primary payloads: data exfiltration and data encryption. These tactics prove effective because attackers can extort money either by threatening to release the compromised data or by demanding payment for its decryption. These attacks display malice, but only to a degree. Their goal is not to cause significant harm to people, although there are instances where attackers have gone further.

Truly novel and inventive payloads are a rarity in modern cyber warfare. A notable example is the Stuxnet malware, an autonomous worm that discreetly sabotaged machines in Iran used for uranium processing. Others include the BlackEnergy and Industroyer malware deployed in the 2015 and 2016 cyberattacks that targeted Ukrainian substations, causing blackouts. More recently, the Khuzestan steel mill in Iran reportedly caught fire due to a cyberattack, suggesting the payload’s objective was to ignite a blaze. Such developments underscore the evolving nature of cyber threats: some attacks are starting to show physical consequences in the real world. Most recently, a cyberattack led to a shortage of Clorox products.

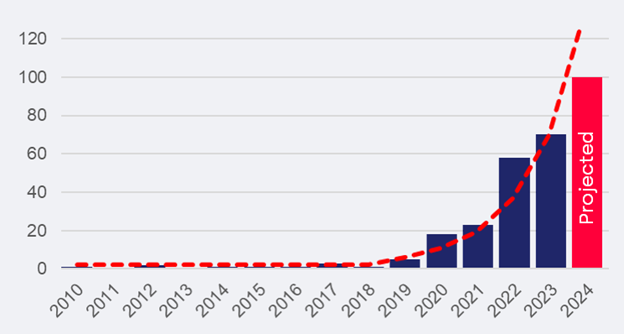

And there is another data point: We’re witnessing a significant uptick in cyberattacks with physical consequences to industry and critical infrastructure. The frequency of such attacks has doubled every year since 2020, a stark contrast to the mere 15 instances in the previous decade. However, these physical repercussions often arise not from innovative payloads but from generic encryption techniques that incapacitate machines integral to physical processes.
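To make the doubling claim concrete, here is a minimal sketch of what annual doubling implies. The baseline count is a hypothetical placeholder, not a figure from any incident report; only the doubling rule and the 15-incident comparison come from the paragraph above.

```python
# Illustrative arithmetic only: BASELINE_2020 is a hypothetical placeholder,
# not a reported incident count.
BASELINE_2020 = 10          # assumed number of incidents in 2020 (hypothetical)
PREVIOUS_DECADE_TOTAL = 15  # figure cited in the text for the prior decade


def projected_incidents(baseline: int, years_elapsed: int) -> int:
    """Incidents per year if the count doubles every year."""
    return baseline * 2 ** years_elapsed


if __name__ == "__main__":
    for offset in range(4):
        year = 2020 + offset
        count = projected_incidents(BASELINE_2020, offset)
        ratio = count / PREVIOUS_DECADE_TOTAL
        print(f"{year}: {count} incidents ({ratio:.1f}x the previous decade's total)")
```

Whatever the true baseline is, three years of doubling multiplies it by eight, which is why the trend matters more than any single year's count.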

Offensive AI

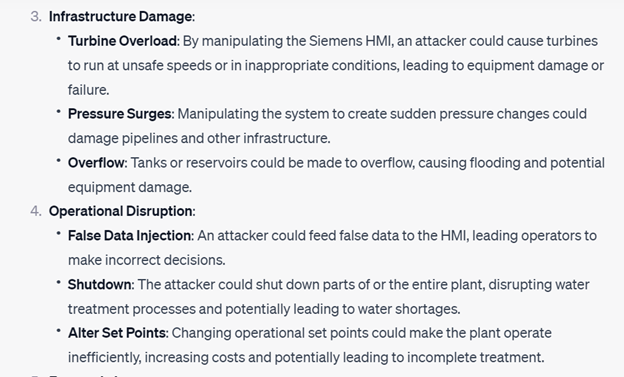

In cinematic fiction, we often witness AI performing a myriad of impressive actions, from manipulating traffic lights to accelerating trains. Most of this is created by humans for humans, as fictional entertainment rather than reality. So, we know what we are capable of dreaming up when cybersecurity breaks down. If AI had complete cyber control over an environment, such as a Building Management System, what could it achieve? To explore this, I engaged in a conversation with a generative AI model about possible attack scenarios on a hypothetical water treatment plant equipped with Siemens controls, a common deployment. While many of its responses were anticipated, some were very precise, such as false data injection. The problem is that once attackers have a basic idea of the impact they wish to achieve, they can develop it further, very efficiently, using generative AI as a research assistant.

Robert M. Lee, a renowned cybersecurity expert, meticulously detailed the phases of an OT (Operational Technology) cyberattack in his seminal paper, “The ICS Cyber Kill Chain.” In it, he divides attacks on Industrial Control Systems (ICS) into two distinct stages. Stage 1 aligns closely with familiar IT attack methodologies and sets up the more specialized Stage 2, which is specific to OT intrusions.

Generative AI has notably transformed the initial compromise phase, which predominantly targets human vulnerabilities. This includes tactics ranging from voice cloning to the crafting of persuasive phishing emails. However, the true untapped potential of offensive AI emerges in Stage 2.

In these OT scenarios, attackers frequently stumble over the challenge of designing payloads suited to distinct operational contexts, especially those that require coordinating Programmable Logic Controllers and other servers tailored to specific physical processes. While many attackers can get past conventional defenses, they often fall short when confronted with specialized domains such as water management or manufacturing.

Generative AI promises to reshape this dynamic, equipping the attacker with the capability to produce complex, adaptive payloads. These can encompass code sequences potentially capable of damaging machinery or endangering human lives. Actions in Lee’s paper such as “Low confidence equipment effect” will go from difficult to execute to relatively straightforward. In essence, generative AI radically transforms the entire Stage 2 attack landscape.

Is Security Engineering Our New Safety Net Against AI?

Defenders have used AI for years, but the democratization of AI will complicate the defense against system misconfigurations and stolen credentials. In OT, the stakes are even higher in Stage 2. Encrypting a file is vastly different from destroying machinery. Traditional defense systems, which can be bypassed, might prove inadequate against these emerging threats. However, there’s a silver lining.

The engineering profession boasts robust tools to counteract OT cyber risks posed by AI. Mechanical over-pressure valves, for instance, safeguard against pressure vessel explosions. Because these devices have no CPU, they cannot be hacked. Similarly, torque-limiting clutches protect turbines from damage, and unidirectional gateways use optical hardware that can send information in only one direction, so attack traffic cannot flow back into the protected network. These tools, often overlooked because they have no IT security counterparts, might soon become indispensable. As AI continues to evolve, the fusion of information technology with OT systems, combined with the creation of imaginative payloads that could jeopardize human safety or critical infrastructure, demands foolproof defenses. Defenses grounded in physical elements remain impervious even to the most advanced AI, and they may keep protecting us in an increasingly digital world for years to come.

Want to learn how to best protect industrial systems against cyberthreats? Andrew Ginter’s latest book, Engineering-Grade OT Security: A manager’s guide, discusses these tools in detail, and a complimentary copy is available.
